Note: One of the things this post is desperately missing is a definition of identity as it's used in the later, more speculative half. Umm, maybe later.

Kazys emails:

My sense is that if there is anything optimistic, it is to recall that the web went roughly...

1. rough HTML -> 2. static sites by designers -> 3. Flash and information architecture (parallel streams) approaches -> 4. template-driven design hooked to massive databases (even for personal sites)/web 2.0 cross-site interactivity.

I see architecture as being at best in stage 3 (if not in stage 2) of this. If we can precipitate a stage 4, then I think things will be interesting.

This three-line history of the web is instructive because it highlights the critical tectonic changes that have allowed the web to grow immensely in size and richness. As the methods of producing websites have evolved, there has been a steady move away from decorating information and towards handling and processing large amounts of content.

About a decade ago the web faced an explosion in consumer popularity (and thus in the amount of content it contained), and the response was to impose order through structure and organization. This saw the rise of information architecture, a problematic term in this context but one that aspires to literally structure information and interactions as clearly as possible for the user. The imperative to continuously integrate larger and larger data sources and to offer more complex interactions has brought with it specialization in how information is stored and processed. The result is the web application, which differs from a website in its ability to perform tasks as well as display information; Hotmail is an early example. Issues of user-centered design are still critical, but now there is also a need to recognize the web as both a thing and its own infrastructure, a realization with implications for interoperability between sites, standardization, and the 'typology' of websites.

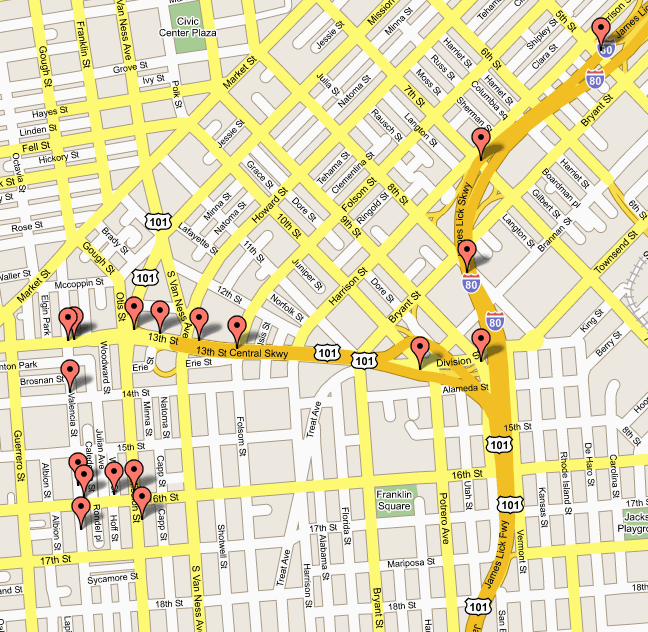

Google Maps, for instance, is widely accepted as the central resource for maps. To end users it's quite straightforward: Google Maps distributes maps and some spatial data. However, given the quantity and specificity of this information, it does not make sense for every website that needs a map to maintain its own cartographic resources. In response, the web has adopted what's called an Application Programming Interface (API) model, which turns a web application into infrastructure for other applications. The API is a predefined menu of machine-to-machine interactions that a web application offers to the world. Thus, while Google may offer you just a map, it offers other web applications the ability to "borrow" its resources to process and retrieve arbitrary spatial data.

For instance, an API is what allows Apple and AT&T to use Google Maps on the iPhone without duplicating all of the cartographic resources that Google maintains and without simply redirecting users to Google. This last point is quite important: an API is used behind the scenes. It allows a website to offer, under its own name, information services that it could never deliver by itself.
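To make the "predefined menu" idea a little more concrete, here is a minimal Python sketch. Everything in it is hypothetical: `HypotheticalMapAPI`, its `geocode` and `nearby` methods, the place names and the coordinates are invented for illustration and do not model Google's actual API. The point is only the shape of the arrangement: the provider keeps the data and publishes a few operations, and a third-party page calls them behind the scenes.

```python
# A minimal sketch of the API idea: a provider exposes a fixed "menu" of
# machine-to-machine calls, and another site uses them behind the scenes.
# All names and coordinates here are hypothetical; this is not the Google Maps API.


class HypotheticalMapAPI:
    """Stands in for a map provider that maintains the cartographic data."""

    def __init__(self) -> None:
        # The provider's own data store, maintained in one place (made-up values).
        self._places = {
            "corner shop": (10.0, 20.0),
            "train station": (10.5, 20.5),
        }

    # The public "menu": the only operations outside applications may request.
    def geocode(self, name: str) -> tuple[float, float]:
        """Return (latitude, longitude) for a named place."""
        return self._places[name]

    def nearby(self, name: str, radius_deg: float) -> list[str]:
        """Return other places within a radius of `name`.

        Simplification: distance is measured naively in coordinate degrees.
        """
        lat, lon = self.geocode(name)
        return sorted(
            other
            for other, (olat, olon) in self._places.items()
            if other != name
            and ((olat - lat) ** 2 + (olon - lon) ** 2) ** 0.5 <= radius_deg
        )


def render_shop_page(shop: str, maps: HypotheticalMapAPI) -> str:
    """A third-party site builds its own page from the provider's answers.

    The user sees only this page: no redirect to the provider, and no
    cartographic data stored by the site itself.
    """
    lat, lon = maps.geocode(shop)
    neighbours = maps.nearby(shop, radius_deg=1.0)
    return f"{shop} at ({lat}, {lon}); nearby: {', '.join(neighbours)}"


if __name__ == "__main__":
    print(render_shop_page("corner shop", HypotheticalMapAPI()))
```

Run as a script, this prints `corner shop at (10.0, 20.0); nearby: train station`. The shop's page never holds a map of its own; it only knows which items on the provider's menu to ask for.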

In the near future we are likely to witness an increasing number of websites that are little more than wrappers or bundlers of multiple APIs, drawing on the potential of sites like Google, Flickr, Amazon and others to release something new into the world. While the API may certainly be a commercial vehicle, it's also a profound public gesture that has spawned an entire subculture of mashups devoted to building new tools around these publicly available resources. As more and more websites make use of the many available APIs, the web melts ever more together.
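A mashup in this sense can be sketched as a site that holds no data of its own and only recombines answers from other services. In the Python sketch below, `stub_geocoder` and `stub_photo_search` are hypothetical stand-ins with made-up data; they do not model the real Flickr or Google Maps APIs. What the mashup contributes is the combination: photos pinned to places.

```python
# A minimal sketch of a "mashup": a site that holds no data of its own and
# only combines answers from two external services. Both services are
# hypothetical stubs; they do not model the real Flickr or Google Maps APIs.

from typing import Callable

Coordinates = tuple[float, float]


def stub_geocoder(place: str) -> Coordinates:
    """Hypothetical map service: place name -> (lat, lon). Made-up values."""
    return {"harbour": (1.0, 2.0), "old town": (1.5, 2.5)}[place]


def stub_photo_search(tag: str) -> list[str]:
    """Hypothetical photo service: tag -> photo identifiers. Made-up values."""
    return {"harbour": ["photo-17", "photo-42"], "old town": ["photo-8"]}.get(tag, [])


def photo_map(
    places: list[str],
    geocode: Callable[[str], Coordinates],
    find_photos: Callable[[str], list[str]],
) -> dict[str, Coordinates]:
    """Pin every photo of each place onto that place's coordinates.

    The new thing this site offers is the combination itself; the
    underlying maps and photos stay with the services that provide them.
    """
    pins: dict[str, Coordinates] = {}
    for place in places:
        location = geocode(place)
        for photo in find_photos(place):
            pins[photo] = location
    return pins


if __name__ == "__main__":
    print(photo_map(["harbour", "old town"], stub_geocoder, stub_photo_search))
```

Because both services are passed in as plain functions, either could be swapped for another provider without touching `photo_map`, which is one way to read the agility of the API model.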

Paradoxically, then, the web is serviced by a limited number of infrastructural sources (concentration of resources), while the services built upon this infrastructure are increasingly defined by the value they add to that infrastructural baseline (differentiation of individuals). In other words, adding new content to a system already bursting at its seams is of little value. New potentials, that is, new mechanisms for processing, must be introduced to create new effects. The implicit truth of the knowledge economy is that once information has been commodified, competition must occur on the basis of recombinant capabilities.

Let me now attempt to reclaim the title of architect by making this discussion relevant to the designer of buildings and cities. The progression Kazys outlines above finds a parallel in the design of buildings, something like this (though stage 4 as defined here is still problematic, I think):

1. Functional shelter (construction doc)

2. Shelter & aesthetics (sketch)

3. Shelter & aesthetics & instrumental organization (diagram)

4. Shelter & aesthetics & organization & processing (prototype, scenario?)

To the architect this raises the question, "How can buildings process (things)?" The IA crowd loves to cite Stewart Brand's *How Buildings Learn*, but his notion that buildings "learn" by being violently reconfigured or retrofitted is a somewhat dismal prospect. While the recursive rebuilding of a shelter's guts allows it to adapt, the building is not learning; it is allowing the architect to learn from it. The book spiral of OMA's Seattle Public Library was designed to adapt to changes in its core program of organizing objects in space, but this too falls short of actual processing. Cedric Price's Generator proposal perhaps comes closest, using information processing and robotics to allow an artificial intelligence to reconfigure spaces, but the result is a shell game: identical cubes of space tossed around in a slippery illusion. Is there any there there?

If the "processing" in question is similar to that enabled by an API, we have to ask what the inputs and outputs are. Is the input program? Judging by the number of studio briefs requiring students to marry two or more unlikely functions in one building, program has had its vogue. If program is the input, then an architecture of multiple programs becomes a processing machine that creates something new by symbiotically connecting its inputs. The combination of a private residence, architectural office, and museum collection in Sir John Soane's House & Museum, for instance, forced all three into a unique spatial relationship that required specific developments in plan, section, and the detailing of the building. But if the endgame is merely hyper-specific combinations of program, we've lost the potential, the agility, of the API.

Perhaps just as important as what's being processed is where this processing occurs. I'm willing to accept that the API as a model for architecture contributes less to the design of individual buildings than to the function of the city, but it should affect both. The API, after all, is a form of structured communication and would seem to bear on both the identity and the programming of individual buildings, if not more. If Google Maps exists concurrently as an eponymous website and, through its role as a piece of web infrastructure, as an active part of many other websites, then a similar logic should hold for an API architecture. Rather than a synthetic project in the modernist sense, where multiple systems are resolved into a singular entity, this new synthesis should allow multiple systems / programs / things(?) to exist simultaneously as individual and interwoven elements. The constant struggle among these chunks for a preferential position in defining the project's identity could go a long way towards producing a motivated mood or atmosphere: affect as a catalyst of effect.